Spooky or spectacular – depending on your level of AI suspicions – a study has found that artificial intelligence is on a par with human experts when making medical diagnoses based on images

This is an edited version of an article first published in The Guardian

The potential for artificial intelligence (AI) in healthcare has caused excitement, with advocates saying it will ease the strain on resources, free up time for doctor-patient interactions and even aid the development of tailored treatment. Last month the government announced £250m of funding for a new NHS artificial intelligence laboratory.

However, experts have warned that the latest findings are based on a small number of studies, because the field is littered with poor-quality research.

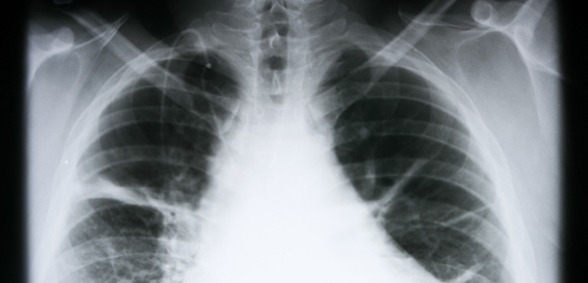

One burgeoning application of AI is its use in interpreting medical images – a field that relies on ‘deep learning’, a sophisticated form of machine learning in which a series of labelled images are fed into algorithms that pick out features within them and ‘learn’ how to classify similar images. This approach has shown promise in the diagnosis of diseases from cancers to eye conditions.

However, questions remain about how such deep learning systems measure up to human skills. Now researchers say they have conducted the first comprehensive review of published studies on the issue, and have found human and machine accuracy rates to be almost identical.

Reality check for hype

Professor Alastair Denniston, of the University Hospitals Birmingham NHS foundation trust and a co-author of the study, said the results were encouraging, but that the study was a reality check for the hype around AI.

Dr Xiaoxuan Liu, the lead author of the study from the same NHS trust, agreed. “There are a lot of headlines about AI outperforming humans, but our message is that it can, at best, be equivalent.”

Writing in the Lancet Digital Health, Denniston, Liu and colleagues reported how they focused on research papers published since 2012 – a pivotal year for deep learning.

An initial search turned up more than 20,000 relevant studies. However, only 14 studies – all based on human disease – reported good quality data, tested the deep learning system with images from a separate dataset to the one used to train it, and showed the same images to human experts.

The team pooled the most promising results from each of the 14 studies to reveal that deep learning systems correctly detected a disease state 87% of the time – compared with 86% for healthcare professionals. AI correctly gave the all-clear 93% of the time, compared with 91% for human experts.
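The two pairs of percentages above correspond to measures usually called sensitivity (how often disease is correctly detected) and specificity (how often the all-clear is correctly given). As a rough sketch of the arithmetic, the snippet below computes both from counts of correct and incorrect calls. The function names and the counts are hypothetical, chosen only so that the output matches the pooled AI figures; they are not data from the review.

```python
def sensitivity(true_pos: int, false_neg: int) -> float:
    """Share of diseased cases that were correctly flagged as diseased."""
    return true_pos / (true_pos + false_neg)


def specificity(true_neg: int, false_pos: int) -> float:
    """Share of healthy cases that were correctly given the all-clear."""
    return true_neg / (true_neg + false_pos)


# Hypothetical counts for illustration only (not taken from the study):
# 100 images that show disease and 100 images that do not.
ai_sensitivity = sensitivity(true_pos=87, false_neg=13)
ai_specificity = specificity(true_neg=93, false_pos=7)

print(f"sensitivity: {ai_sensitivity:.0%}")  # sensitivity: 87%
print(f"specificity: {ai_specificity:.0%}")  # specificity: 93%
```

Reporting the two figures separately matters because a system could score well on one simply by sacrificing the other, for example by flagging every image as diseased.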

However, the healthcare professionals in these scenarios were not given the additional patient information that they would have in the real world and that could steer their diagnosis.

“This excellent review demonstrates that the massive hype over AI in medicine obscures the lamentable quality of almost all evaluation studies,” Professor David Spiegelhalter, chair of the Winton Centre for Risk and Evidence Communication at the University of Cambridge, asserted.

“Deep learning can be a powerful and impressive technique, but clinicians and commissioners should be asking the crucial question: what does it actually add to clinical practice?”
